Project Pinhole - Selling things via Image

Project Pinhole is a Trade Me initiative that uses our data to create great experiences based around a user’s camera.

A camera, in its most basic incarnation, can be made with just a pinhole in the side of a box. It’s such a simple concept, yet it kickstarted the entire field of photography. Nowadays, everything has a camera, and most smartphones have at least two. What they do no longer seems revolutionary, because we’re so used to it. In the same way that a pinhole kickstarted modern-day photography, a smartphone camera holds a huge amount of potential for new technologies. Combined with emerging machine-learning techniques, amazing things can be done with just an image.

What is Project Pinhole

Project Pinhole is an attempt to make a smartphone camera more useful by leveraging Trade Me’s data and the user’s device to create a great selling experience. It went from inception to delivery within the space of a few months, and has succeeded in combining a number of different machine learning techniques into one easy-to-use Android app.

This post won’t cover everything. Instead, a series of blog posts will detail the development of Pinhole by Trade Me, an Android application which allows users to list items on Trade Me without using a keyboard. This instalment focuses on the background and how Project Pinhole came to exist. It will be followed by technical posts describing the journey to build the final application.

Part 1 — Beginnings

Taking a step back, Trade Me originated as a platform for Kiwis to sell things to one another. Over the years, both people and technology have changed. In recent years we’ve seen dramatic improvements in machine learning techniques, resulting in some pretty cool experiences, like finding pictures of your pet by name, and medical software which can predict heart disease by looking at your eyes. Machine learning is heavily data-driven, and at Trade Me we happen to have a lot of data. It wasn’t long before someone realised that data could be used more proactively to build great experiences for our users.

A small team of us in the mobile space set out to find an area of Trade Me which could benefit from a new data-driven experience, and settled on the idea of an image-based method of listing items on the site. Over the course of four months we designed and built not only an application, but the entire machine learning pipeline which makes it all possible. The application we ended up with allows anyone to take a picture of something they want to sell, dictate a description, and let us fill in the blanks. If it all goes well, you can have a listing on Trade Me twenty seconds after taking an image.

What came first

We tried out a number of projects in research and development time prior to the inception of Project Pinhole. These projects experimented with the image-related data we have, trying to do things like infer the category of a product from an image, or use generative models to produce a title. These one-day projects influenced the decisions we made when starting Pinhole, helping us figure out what we should do and what needed to be done better.

One of the early R&D projects we put together demonstrates how we tried category inference to make the process of listing items faster. It uses a simple on-device model and a basic price suggestion framework to try to populate information about the item.

From playing around with a sample of the project above, we found it helped a lot when selling things, but only when the model got it right. From a user’s perspective, a blatantly wrong category felt like either they’d taken a bad picture, or the technology underpinning it all was poorly put together. That’s not a good experience for the user, and it’s not a good experience for us either, as it might lead to poor retention. Luckily, we know how confident the model is in what it predicts, and with careful design we can decide whether or not to show the user our predictions.
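As a rough illustration of that last point, here is a minimal sketch of gating a suggestion on model confidence. It is not Pinhole’s actual code: the `CategoryPrediction` type, the `suggestion_to_show` function and the `0.8` threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class CategoryPrediction:
    """One category guess from a (hypothetical) image model."""
    category: str
    confidence: float  # model's score for this category, 0.0 to 1.0


# Assumed cut-off for illustration only; a real value would need tuning.
SHOW_THRESHOLD = 0.8


def suggestion_to_show(
    predictions: Sequence[CategoryPrediction],
    threshold: float = SHOW_THRESHOLD,
) -> Optional[str]:
    """Return the top category only if the model is confident enough.

    Returning None means "show nothing", so the user never sees a
    blatantly wrong guess and simply picks the category themselves.
    """
    if not predictions:
        return None
    best = max(predictions, key=lambda p: p.confidence)
    return best.category if best.confidence >= threshold else None


confident = [CategoryPrediction("Bikes", 0.93), CategoryPrediction("Scooters", 0.05)]
unsure = [CategoryPrediction("Bikes", 0.41), CategoryPrediction("Scooters", 0.39)]

shown = suggestion_to_show(confident)   # "Bikes"
hidden = suggestion_to_show(unsure)     # None, so the field is left blank
```

The design choice here is that a missing suggestion costs the user a few taps, while a wrong one costs their trust, so the threshold errs on the side of staying quiet.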

All in all we had separately developed the following frameworks in one way or another before starting out on the Pinhole journey:

- Image to Category suggestions

- Image to Title

- Title and category to price suggestions

- Image to Attribute (things like colour, brand etc.)
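One way to picture how these pieces relate is as a small pipeline, where the price suggestion depends on the outputs of the title and category steps. The sketch below is only an illustration under that assumption: `DraftListing`, `build_draft` and the stand-in lambdas are hypothetical, and the real models are not shown.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class DraftListing:
    """Fields a (hypothetical) draft listing would carry."""
    category: str
    title: str
    price: float
    attributes: Dict[str, str] = field(default_factory=dict)


def build_draft(
    image: bytes,
    image_to_category: Callable[[bytes], str],
    image_to_title: Callable[[bytes], str],
    suggest_price: Callable[[str, str], float],
    image_to_attributes: Callable[[bytes], Dict[str, str]],
) -> DraftListing:
    """Run each suggestion step and collect the results into one draft."""
    category = image_to_category(image)
    title = image_to_title(image)
    # The price step takes the title and category, not the image itself.
    price = suggest_price(title, category)
    attributes = image_to_attributes(image)
    return DraftListing(category, title, price, attributes)


# Stand-in functions with made-up outputs, in place of real models.
draft = build_draft(
    b"placeholder image bytes",
    image_to_category=lambda img: "Bikes",
    image_to_title=lambda img: "Red mountain bike",
    suggest_price=lambda title, category: 150.0,
    image_to_attributes=lambda img: {"colour": "red"},
)
```

Passing the steps in as functions keeps each model independent, which matches how they were developed separately before being brought together.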

Project Inception

We had a bunch of models specialising in generating suggestions for different fields in the “sell your item” form, and we needed a way of consolidating them all. Challenged to use our data to create a great experience, we decided to draw up some designs for an app which uses the camera in an experimental way. The Crazy 8s design sprint method worked well for brainstorming, leaving us with a bunch of ideas, one of which was selling things without a keyboard.

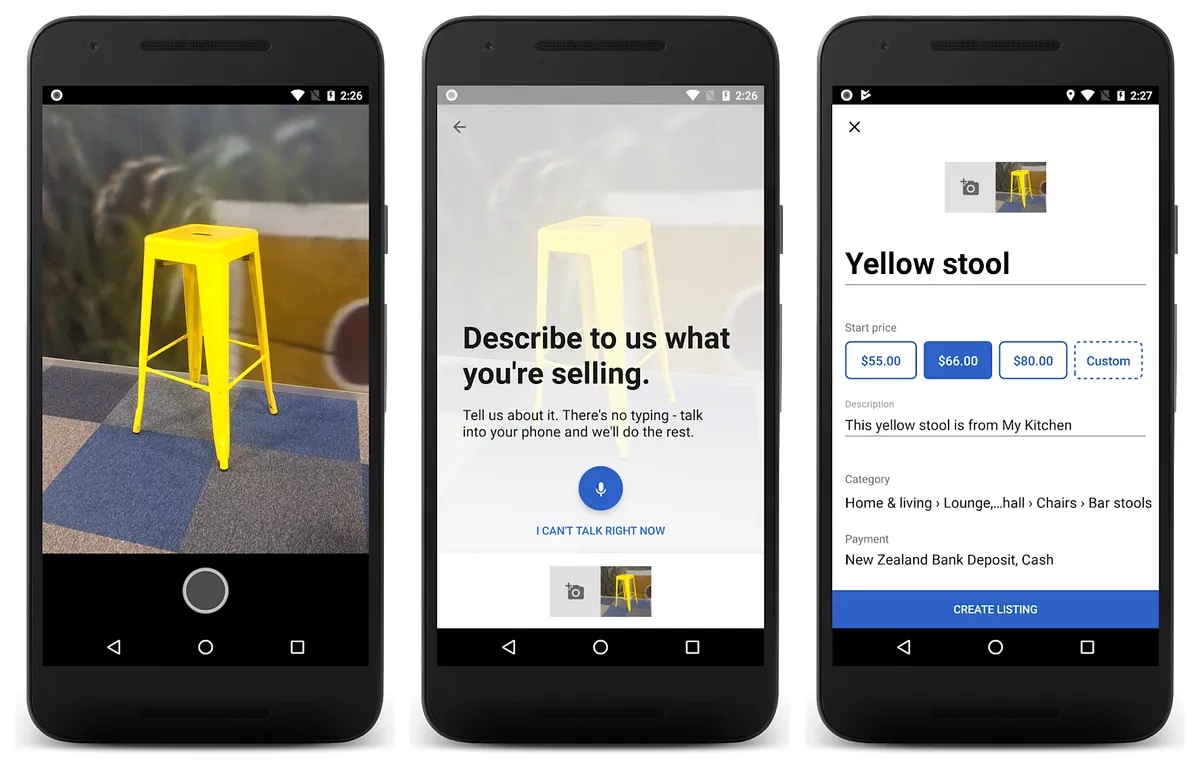

The final design we settled on was similar in spirit to that one-day category inference project. It allows a user to list an item on Trade Me without using a keyboard, leveraging speech and image recognition to fill out the entire listing. The design was ambitious: it attempted to use as many models as possible to predict and suggest everything needed to sell an item.

We ended up with an Android application which could create entire listings from a single photo. The built-in microphone allows you to dictate your description, and our image models do the rest.

The goal of Project Pinhole was to create an experience that would help Trade Me users sell things in a less stressful way. We’re still gathering feedback, but having used it myself to clear out the garage, I’d say it’s a success.

If you’d like to try it out, Pinhole by Trade Me is available on the Google Play Store.